This article reviews the current status of large model research in 2023. It summarizes the various stages of large model development this year and the contributions of major research institutions to large model research. It also summarizes and summarizes the current mainstream types of large models. On this basis, some thoughts and prospects about the future development of large-model technology were made.

The main contents of this article include::

Current status of basic model research

Introduction to LLM model types

Summary and future directions

Current status of basic model research

In 2023, with the development of LLM technology, the open source models of Chinese model research institutions will usher in explosive growth:

In March 2023, Zhipu AI first released the ChatGLM-6B series in the Magic Community. ChatGLM-6B is an open source dialogue language model that supports Chinese and English bilingual question and answer. It is based on the General Language Model (GLM) architecture and has 62 billion parameters. Combined with model quantization technology, users can deploy it locally on consumer-grade graphics cards (a minimum of 6GB of video memory is required at the INT4 quantization level). Now, Zhipu AI's ChatGLM-6B has been updated to the third generation. At the same time, it has launched the CogVLM series in multi-modal mode, as well as CogVLM that supports visual agents, and launched the CodeGeex series models in the code field, while exploring both agent and math. and open source models and technologies.

In June 2023, Baichuan first released the Baichuan-7B model in the Moda community. baichuan-7B is an open source large-scale pre-training model developed by Baichuan Intelligence. Based on the Transformer structure, the 7 billion parameter model is trained on approximately 1.2 trillion tokens, supports Chinese and English bilinguals, and has a context window length of 4096. Baichuan is also one of the first companies to launch pre-trained models, and jokingly claims to provide developers with better “rough houses” so that developers can better “decorate” them, thus promoting the development of domestic pre-trained base models. Subsequently, Baichuan released the 13B model and Baichuan 2 series models, with both open source base and chat versions simultaneously.

In July 2023, at the opening ceremony of WAIC 2023 and the Scientific Frontier Plenary Meeting, the Shanghai Artificial Intelligence Laboratory teamed up with a number of institutions to release a newly upgraded "Scholar General Large Model System", including Scholar·Multimodal, Scholar·Puyu He Shusheng·Tianji and other three basic models, as well as the first full-chain open source system for the development and application of large models. Shanghai Artificial Intelligence Laboratory not only makes model weights open source, but also conducts all-round open source at the model, data, tools and evaluation levels to promote technological innovation and industrial progress. Subsequently, the Shanghai Artificial Intelligence Laboratory successively released the Scholar·Puyu 20B model and the Scholar·Lingbi multi-modal model.

In August 2023, Alibaba open sourced the Tongyi Qianwen 7B model, and subsequently open sourced the 1.8B, 14B, and 72B base and chat models, and provided the corresponding quantized versions of int4 and int8, which can be used in multi-modal scenarios. , Qianwen has also open sourced two visual and speech multi-modal models, qwen-vl and qwen-audio, achieving "full-size, full-modality" open source. Qwen-72B has improved the size and performance of open source large models. Since its release, it has remained at the top of the major charts, filling a gap in the country. Based on Qwen-72B, large and medium-sized enterprises can develop commercial applications, and universities and research institutes can carry out scientific research such as AI for Science.

In October 2023, Kunlun Wanwei released the "Tiangong" Skywork-13B series of tens of billions of large language models, and rare open sourced a large high-quality open source Chinese data set of 600GB and 150B Tokens Skypile/Chinese-Web-Text -150B dataset. High-quality data filtered from Chinese web pages by Kunlun's carefully filtered data processing process. The size is approximately 600GB, and the total number of tokens is approximately (150 billion). It is currently one of the largest open source Chinese data sets.

In November 2023, 01-AI company released the Yi series of models, with parameter sizes ranging from 6 billion to 34 billion, and the amount of training data reaching 30 billion tokens. These models outperform previous models on public leaderboards such as the Open LLM leaderboard and on some challenging benchmarks such as Skill-Mix.

Introduction to LLM model types

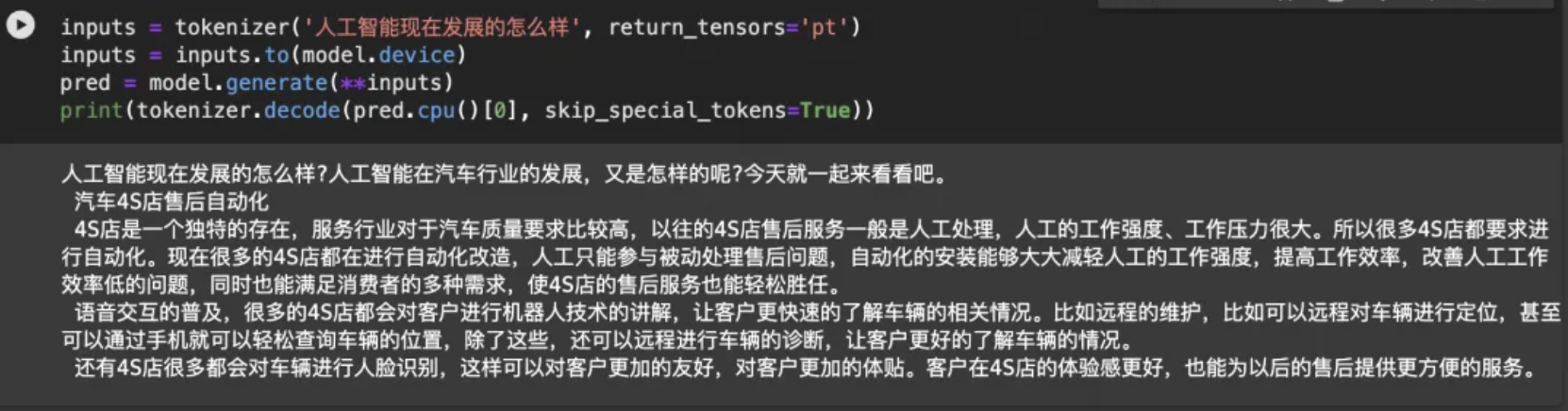

Base model and Chat model

We usually see a model research and development institution open source the base model and chat model. So what is the difference between the base model and the chat model?

First, all large language models (LLMs) work by taking in some text and predicting the text that is most likely to follow it.

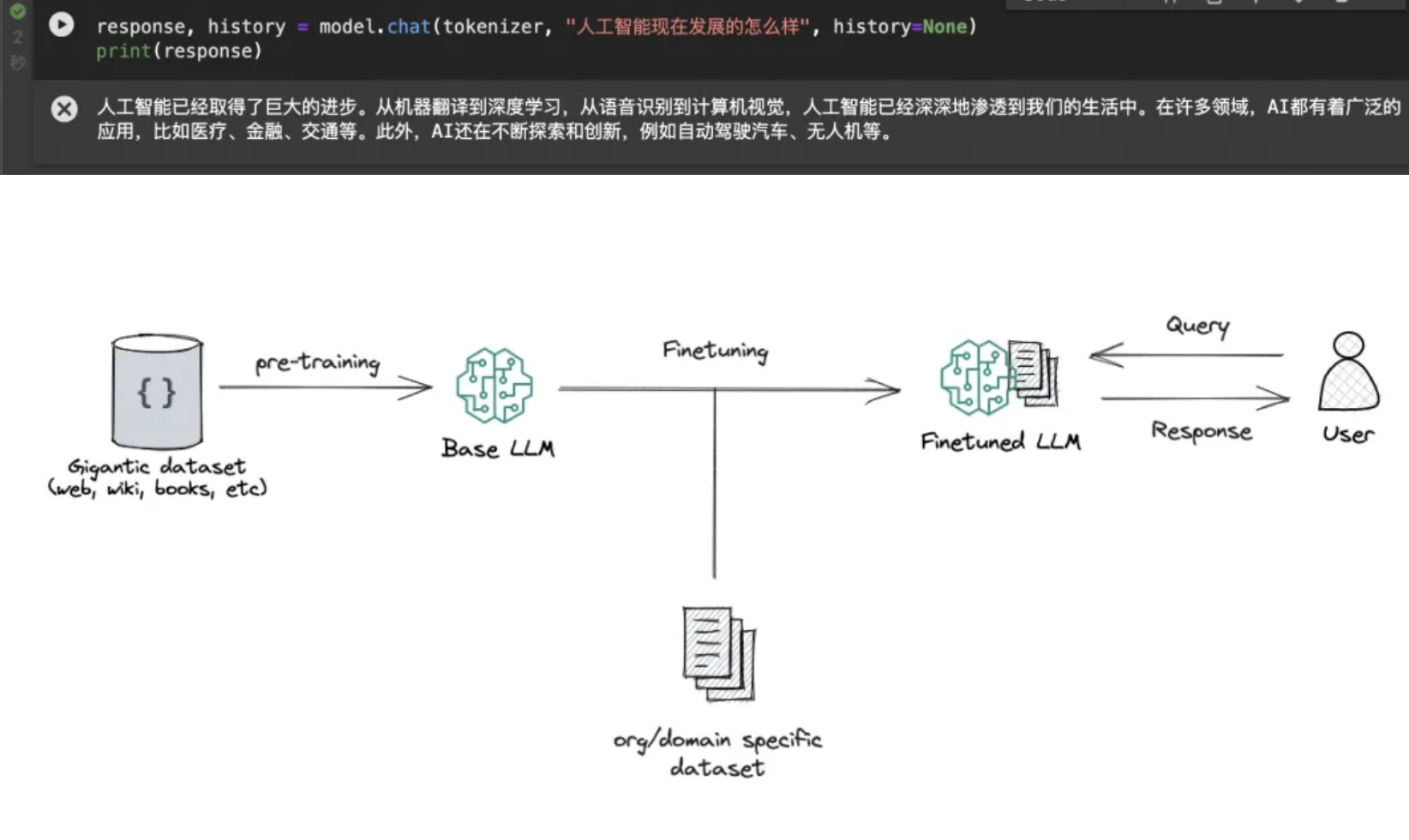

- The base model, also known as the basic model, is a model trained on massive amounts of different texts to predict subsequent texts. Subsequent text is not necessarily a response to instructions and dialogue.

- The chat model, also known as the dialogue model, is based on the base and continues to do fine-tuning and reinforcement learning through conversation records (instructions-responses). When it accepts instructions and talks with the user, it continues to write assistants that follow the instructions and are expected by humans. response content

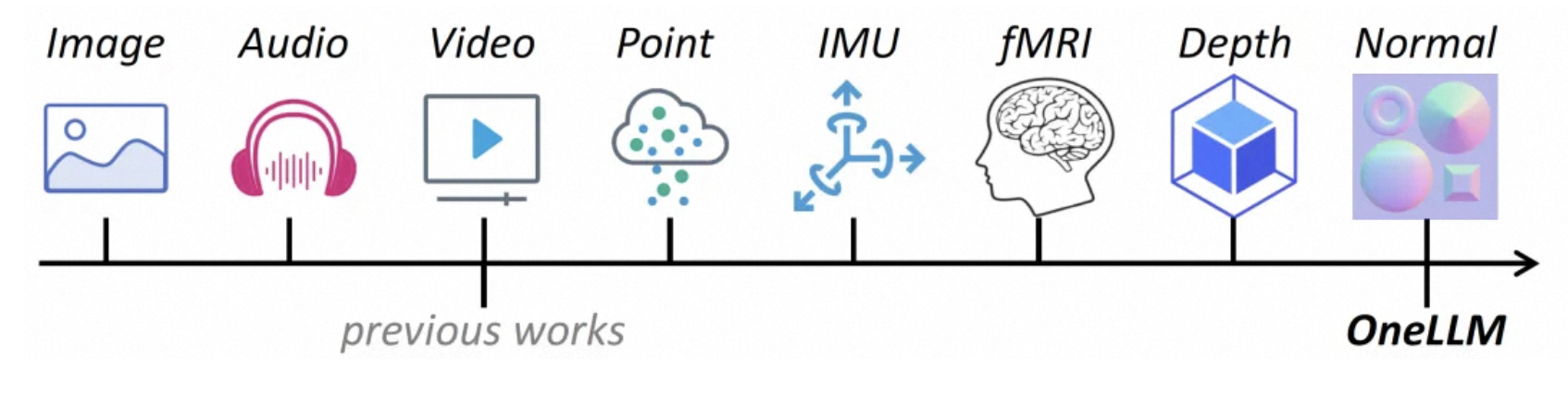

multimodal model

Multimodal LLM combines information from text and other modalities, such as images, videos, audios, and other sensory data. Multimodal LLM is trained on multiple types of data, helping the transformer find the differences between different modalities. relationship, and complete some tasks that the new LLM cannot complete, such as picture description, music interpretation, video understanding, etc.

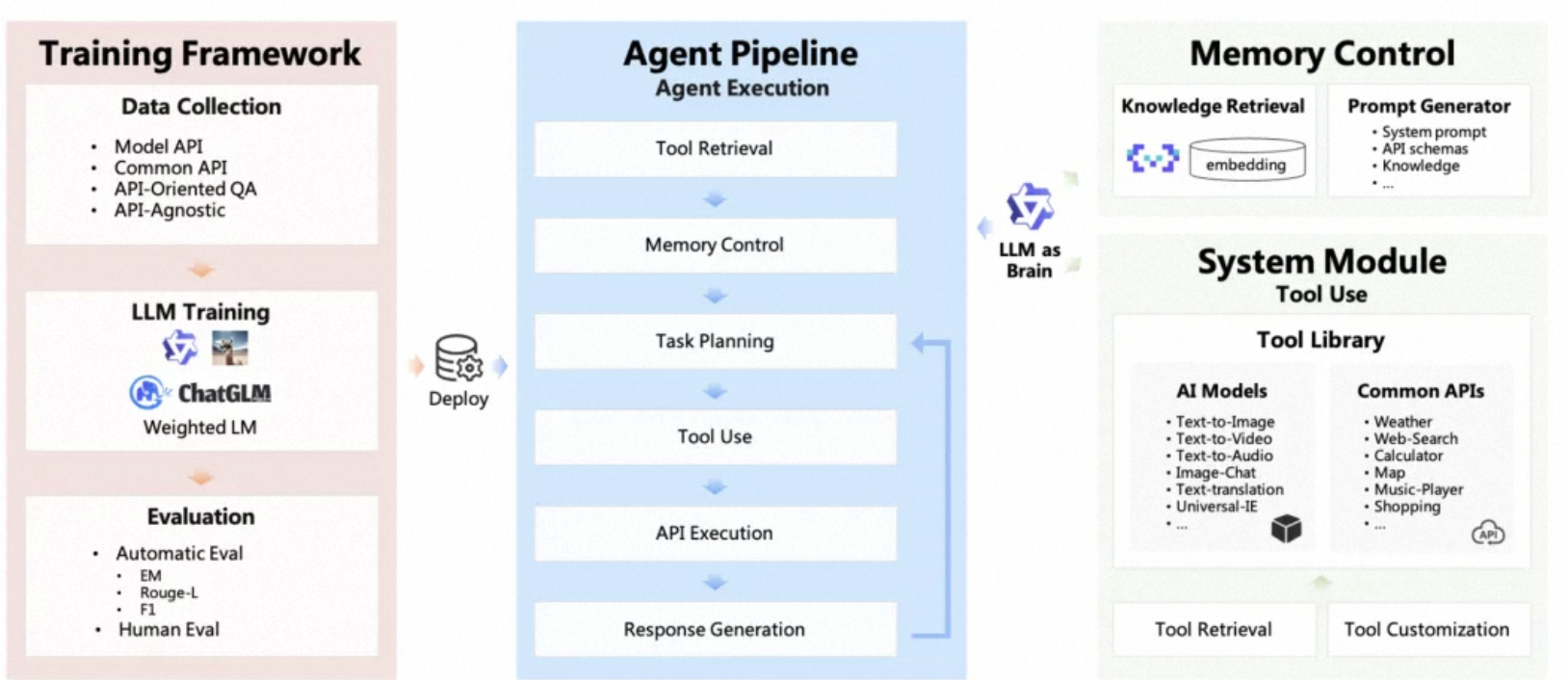

Agent model

LLM has the capabilities of an agent brain and collaborates with several key components, including,

Planning: dismantling sub-goals, correcting errors, reflecting and improving.

Memory: short-term memory (context, long window), long-term memory (implemented through search or vector engine)

Tool use: The model learns to call external APIs to obtain additional capabilities.

Code model

The Code model adds more code data to the model's pre-training and SFT, and performs a series of tasks in the code, such as code completion, code error correction, and zero-sample completion of programming task instructions. At the same time, according to different code languages, there will also be more professional language code models such as python and java.

Summary and future directions

Theory and principles::In order to understand the basic working mechanism of LLM, one of the biggest mysteries is how information is distributed, organized and utilized through very large deep neural networks. It is important to reveal the underlying principles or elements that build the basis of LLMs' capabilities. In particular, scaling appears to play an important role in improving the capabilities of LLMs. Existing research has shown that when the parameter size of a language model increases to a critical point (such as 10B), some emerging capabilities will appear in an unexpected way (sudden leap in performance), typically including context learning, instruction following and Step-by-step reasoning. These “emergent” abilities are fascinating but also puzzling: when and how do LLMs acquire them? Some recent research has either conducted broad-scale experiments investigating the effects of emerging abilities and the enablers of those abilities, or used existing theoretical frameworks to explain specific abilities. An insightful technical post targeting the GPT family of models also deals specifically with this topic, however more formal theories and principles to understand, describe and explain the capabilities or behavior of LLMs are still lacking. Since emergent capabilities have close similarities to phase transitions in nature, interdisciplinary theories or principles (such as whether LLMs can be considered some kind of complex systems) may be helpful in explaining and understanding the behavior of LLMs. These fundamental questions deserve exploration by the research community and are important for developing the next generation of LLMs.

Model architecture:Transformers, consisting of stacked multi-head self-attention layers, have become a common architecture for building LLMs due to their scalability and effectiveness. Various strategies have been proposed to improve the performance of this architecture, such as neural network configuration and scalable parallel training (see discussion in Section 4.2.2). In order to further improve the capacity of the model (such as multi-turn dialogue capability), existing LLMs usually maintain a long context length. For example, GPT-4-32k has an extremely large context length of 32768 tokens. Therefore, a practical consideration is to reduce the time complexity (primitive quadratic cost) incurred by standard self-attention mechanisms.

Furthermore, it will be important to study the impact of more efficient Transformer variants on building LLMs, such as sparse attention has been used for GPT-3. Catastrophic forgetting has also been a challenge for neural networks, which has also negatively impacted LLMs. When LLMs are tuned with new data, the previously learned knowledge is likely to be destroyed. For example, fine-tuning LLMs for some specific tasks will affect their general capabilities. A similar situation occurs when LLMs are aligned with human values, which is called an alignment tax. Therefore, it is necessary to consider extending the existing architecture with more flexible mechanisms or modules to effectively support data updates and task specialization.